Deep Learning

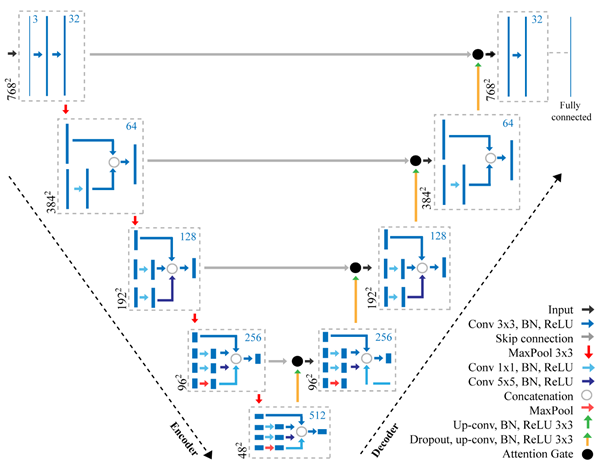

Funding from PhenomUK and BBSRC has allowed me to pursue multiple deep learning projects. These include, but are not limited to: (i) estimating maximum stomatal conductance (gas exchange) from 2D images; (ii) object tracking in dense environments, in this instance tracking wheat ears in crop fields to determine their oscillation; (iii) object detection for various use cases, for example counting pollen; and (iv) feature detection in 3D point clouds. Projects in progress involve deep reinforcement learning to guide active vision, 3D estimation from reduced image sets, and deep learning for affordable self-driving cars in field environments.

Ray Tracer

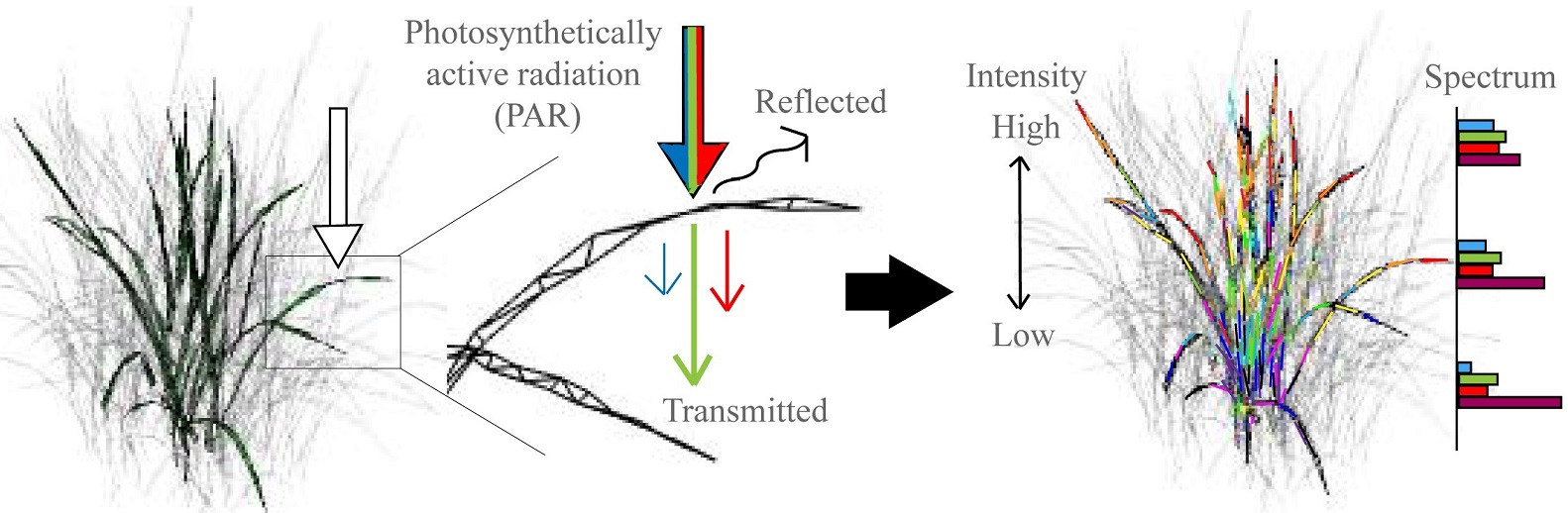

This project, funded by Gatsby, aims to develop a new ray tracer implemented in Python that makes use of multiple GPUs. The ray tracer will be applied to virtual plant models from my previous projects to determine how small architectural variations affect the distribution and composition of light, particularly in the lower layers of a canopy. Each projected ray carries several attributes corresponding to spectral composition and intensity. As rays intersect the plant, their attributes will change to reflect the underlying biological processes, with portions being reflected or transmitted. The ray tracer will be able to fully characterise the light environment within canopies, accounting for small architectural traits, the light environment in which the plant is grown, and the physiological interception of different wavelengths by leaves.
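
To make the idea of attribute-carrying rays concrete, here is a minimal Python sketch of a single ray-leaf interaction. It is not the project's implementation: the `Ray` class, the `interact_with_leaf` function, the waveband names and the optical coefficients are all hypothetical, and the geometry and GPU side are left out entirely.

```python
from dataclasses import dataclass


@dataclass
class Ray:
    # Intensity per waveband in arbitrary units; band names are illustrative.
    intensity: dict


def interact_with_leaf(ray, reflectance, transmittance):
    """Split a ray at a leaf surface into reflected, transmitted and absorbed parts.

    `reflectance` and `transmittance` map each waveband to a fraction in [0, 1];
    whatever is neither reflected nor transmitted is treated as absorbed.
    """
    reflected, transmitted, absorbed = {}, {}, {}
    for band, value in ray.intensity.items():
        r = reflectance[band]
        t = transmittance[band]
        if r < 0 or t < 0 or r + t > 1:
            raise ValueError(f"invalid optical fractions for band {band!r}")
        reflected[band] = value * r
        transmitted[band] = value * t
        absorbed[band] = value * (1 - r - t)
    return Ray(reflected), Ray(transmitted), absorbed


# Placeholder coefficients, not measurements.
incoming = Ray({"red": 100.0, "far_red": 100.0})
leaf_reflectance = {"red": 0.05, "far_red": 0.40}
leaf_transmittance = {"red": 0.05, "far_red": 0.45}

up, down, kept = interact_with_leaf(incoming, leaf_reflectance, leaf_transmittance)
print(up.intensity, down.intensity, kept)
```

Because each band is handled separately, the transmitted ray that continues to lower leaves has a different spectral composition from the incoming one, which is the effect the ray attributes are meant to capture.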

3D Reconstruction

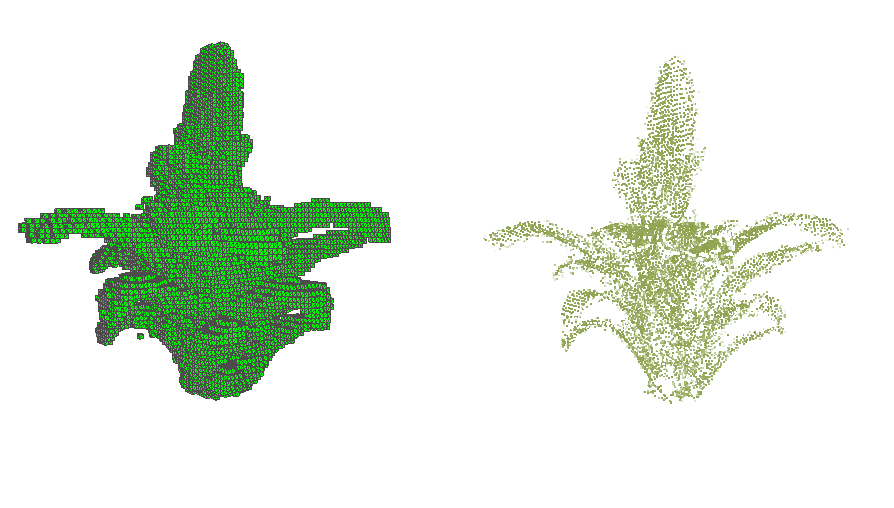

Thanks to funding from BBSRC, EPSRC and PhenomUK, I have over 6 years of experience in developing 3D models, with a particular emphasis on plants. Plants are particularly interesting objects to model: their intricate structure and highly reflective, mutually occluding surfaces make them a complex computer vision problem. Modelling techniques I have applied include, but are not limited to, CT Scans (Hounsfield, Sutton Bonington Campus), Optical Topography (Metrology Team at The University of Nottingham), Depth Estimation (e.g., Kinect), Volumetric Modelling (2D ray tracing) and Structure from Motion (3D point cloud representation from 2D images).

The 4D Plant

Funded by BBSRC, the 4D Plant project placed emphasis on the visual tracking and enhanced 3D reconstruction methods needed to measure and model canopy movement and dynamic photosynthesis in rice and wheat populations. With data collected at both CIMMYT (Obregon, Mexico) and Sutton Bonington (University of Nottingham), the relationships between movement, photosynthetic properties and plant mechanical properties were investigated.

Active Vision

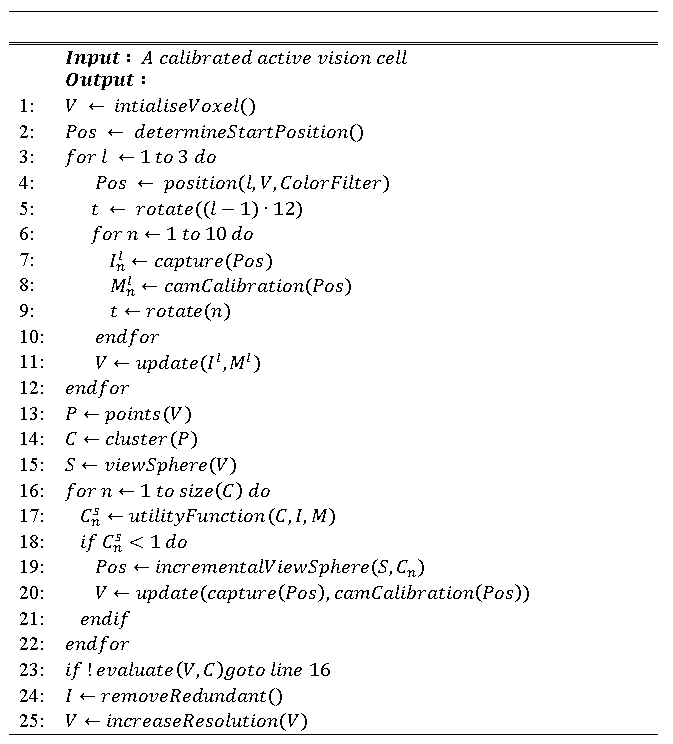

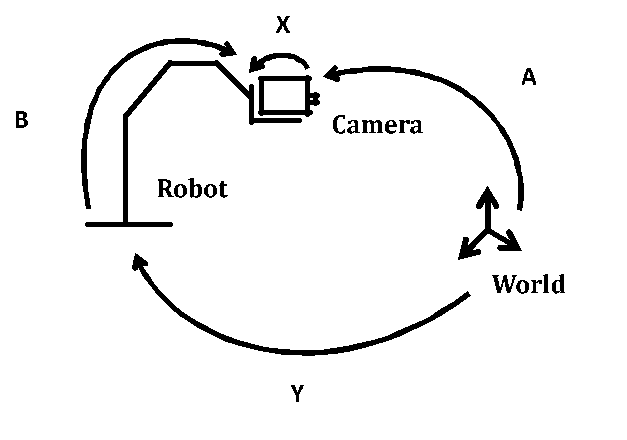

Active vision formed the basis of my PhD, in which I used a camera-mounted robot arm (the Universal Robot 5) to explore the environment, capturing images of an object and producing a 3D model of it. The active vision aspect used simple rules to determine whether the camera should move and, if so, where to. Further work explored the ability to clip plant organs by selecting a point on the virtual model. More recent work investigates the use of deep reinforcement learning, the combination of reinforcement learning and deep learning, to drive the robot, improving accuracy and computational efficiency and supporting the creation of an incremental 3D model.

Stomatal Conductance

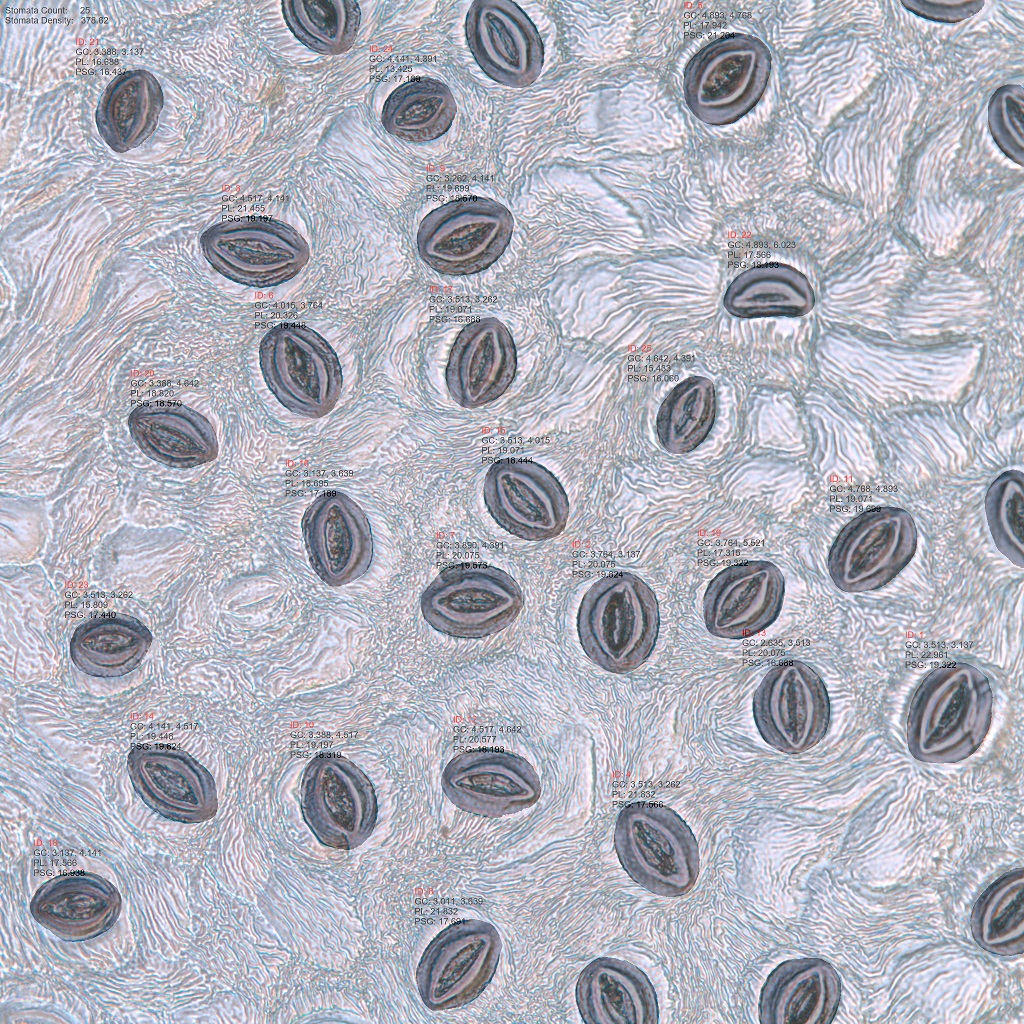

Stomata are integral to plant performance, enabling the exchange of gases between the atmosphere and the plant. The anatomy of stomata influences conductance properties, with the maximal conductance rate, gsmax, calculated from stomatal density and size. In this project, a deep learning network performing semantic segmentation was proposed, providing a rapid and repeatable method for estimating anatomical gsmax from microscopic images of leaf impressions. The proposed pipeline achieves an accuracy of 100% for the classification (monocotyledonous or dicotyledonous) and detection of stomata in both datasets. The automated deep learning-based method gave estimates of gsmax within 3.6% and 1.7% of the values calculated manually by an expert for the wheat and poplar datasets, respectively.
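
The text does not state which equation is used to derive gsmax from density and size. One widely used anatomical form in the stomatal literature combines stomatal density, maximum pore area and pore depth, and the sketch below assumes that form. The function name, the argument names and the default physical constants (approximate values for air at 25 °C) are assumptions for illustration, not the project's code.

```python
import math


def anatomical_gsmax(density_per_m2, max_pore_area_m2, pore_depth_m,
                     diffusivity_m2_s=24.9e-6, molar_volume_m3_mol=24.4e-3):
    """Estimate anatomical gsmax in mol m^-2 s^-1.

    Assumed form:
        gsmax = (d / v) * D * a_max / (l + (pi / 2) * sqrt(a_max / pi))
    where D is stomatal density, a_max the maximum pore area, l the pore depth,
    d the diffusivity of water vapour in air and v the molar volume of air.
    """
    if min(density_per_m2, max_pore_area_m2, pore_depth_m) <= 0:
        raise ValueError("density, pore area and pore depth must be positive")
    # End correction accounting for diffusion shells at the pore openings.
    end_correction = (math.pi / 2) * math.sqrt(max_pore_area_m2 / math.pi)
    return ((diffusivity_m2_s / molar_volume_m3_mol)
            * density_per_m2 * max_pore_area_m2
            / (pore_depth_m + end_correction))


# Illustrative inputs only: 100 stomata per mm^2, 100 um^2 pore area, 10 um depth.
estimate = anatomical_gsmax(density_per_m2=1.0e8,
                            max_pore_area_m2=1.0e-10,
                            pore_depth_m=1.0e-5)
print(f"{estimate:.3f} mol m^-2 s^-1")
```

In a pipeline like the one described, density and pore dimensions would come from the segmentation output rather than being typed in by hand.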

Calibration

Calibration is the process of estimating the rotation and translation of a given object in world space. Many algorithms exist for camera and robot calibration, though each is challenging to grasp. The calibration project allowed me to develop and implement algorithms for calibrating a camera-mounted robot arm and turntable. This setup was used as the platform for an active vision system that produces accurate and robust 3D models of plants. Active vision improves on typical phenotyping setups through its ability to interact with the surrounding environment, overcoming the limitations of static setups. Publications arising from this work can be seen in the publications tab. More recent work, funded by EPPN, involves collaborations with leaders across Europe to identify stronger calibration setups.
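
As a small illustration of what a calibration result looks like, a pose (a rotation plus an offset) can be stored as a 4x4 homogeneous matrix and used to move points between coordinate frames. The sketch below uses only the Python standard library and a rotation about a single axis; the function names are hypothetical, and it shows how an estimated pose is applied and inverted, not how it is estimated.

```python
import math


def rigid_transform(angle_z_rad, translation):
    """Build a 4x4 homogeneous matrix: rotate about the z axis, then translate."""
    c, s = math.cos(angle_z_rad), math.sin(angle_z_rad)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def apply_transform(matrix, point):
    """Map a 3D point through a 4x4 homogeneous matrix."""
    homogeneous = (point[0], point[1], point[2], 1.0)
    return tuple(sum(row[i] * homogeneous[i] for i in range(4))
                 for row in matrix[:3])


def invert_rigid(matrix):
    """Invert a rigid transform using R^T and -R^T t (no general matrix inverse)."""
    rot_t = [[matrix[j][i] for j in range(3)] for i in range(3)]
    t = [matrix[i][3] for i in range(3)]
    new_t = [-sum(rot_t[i][j] * t[j] for j in range(3)) for i in range(3)]
    return [rot_t[i] + [new_t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


# Hypothetical pose of a camera in the robot base frame.
camera_in_base = rigid_transform(math.pi / 2, (1.0, 0.0, 0.0))
point_in_base = apply_transform(camera_in_base, (1.0, 0.0, 0.0))
point_back = apply_transform(invert_rigid(camera_in_base), point_in_base)
print(point_in_base, point_back)
```

Chaining such matrices (for example turntable to robot base to camera) is what lets images taken from different viewpoints be placed in one common frame.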
